AgroHack

A hands-on workshop for an agrotech hackathon 🌽

Use Azure Stream Analytics to stream data into the storage account

In the previous step you created a storage account to store telemetry data. In this step you will use Azure Stream Analytics to stream data into the storage account.

Azure Stream Analytics

Azure Stream Analytics provides real-time analytics on streams of data, allowing you to stream data from one service to another.

Create the Azure Stream Analytics Job

Azure Stream Analytics jobs need to be created from the Azure Portal; you cannot create them using the Azure CLI.

If you don’t want to use the portal, you can use PowerShell by following these instructions.

-

Open the Azure Portal

-

Log in with your Microsoft account if required

-

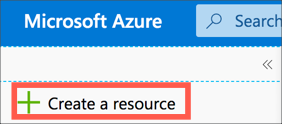

From the left-hand menu select + Create a resource

-

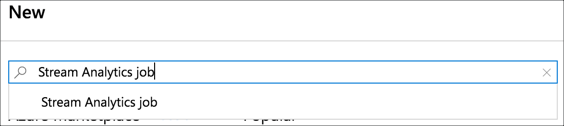

Search for

Stream Analytics Joband select Stream Analytics Job

-

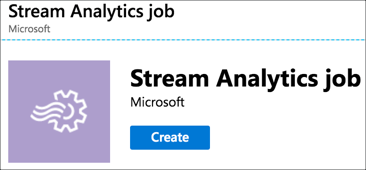

Select Create

-

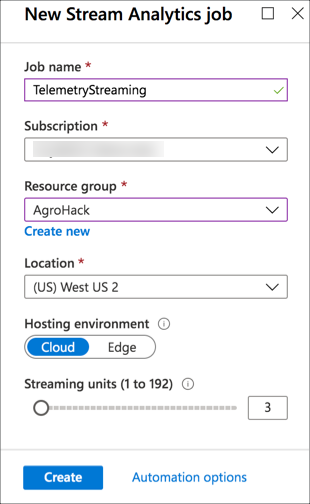

Fill in the details for the Stream Analytics Job

-

Name the job

TelemetryStreaming -

Select your Azure subscription

-

For the Resource group, select AgroHack

-

Select a Location closest to you, the same location you used in the previous step to create the resource group and event hubs.

-

Leave the rest of the options as the defaults

-

Select Create

-

-

Once the deployment has completed, select the Go to resource button.

Configure the Azure Stream Analytics Job

Azure Stream Analytics Jobs take data from an input, such as an Event Hub, run a query against the data, and send results to an output such as a storage account.

Set an input

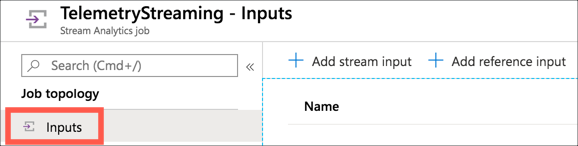

- From the Stream Analytics Job, select Job topology -> Inputs from the left-hand menu

-

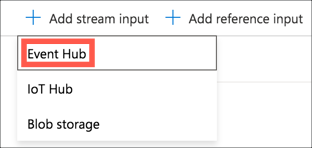

Select + Add stream input, then select Event Hub

-

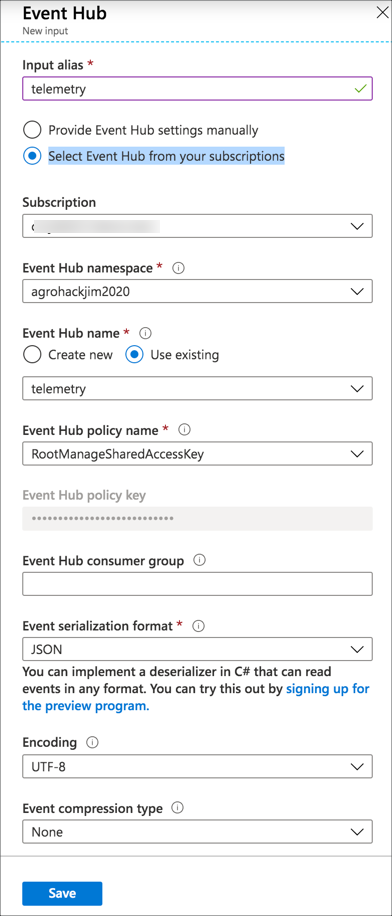

Fill in the input details

-

Set the alias to be

telemetry -

Select Select Event Hub from your subscriptions

-

Select your subscription and Azure Event Hubs Namespace

-

Select Use Existing for the Event hub name

-

Select the

telemetryevent hub -

Leave the rest of the options as the defaults

-

Select Save

-

Set an output

-

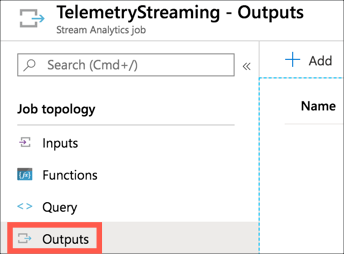

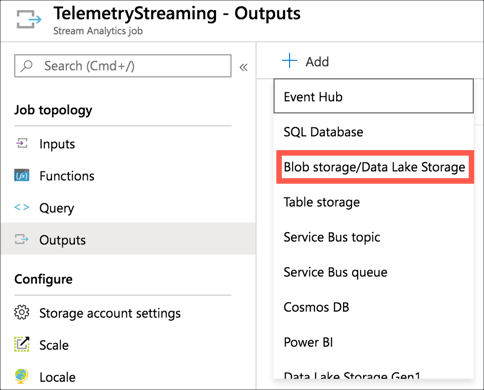

From the Stream Analytics Job, select Job topology -> Outputs from the left-hand menu

-

Select + Add, then select Blob storage

-

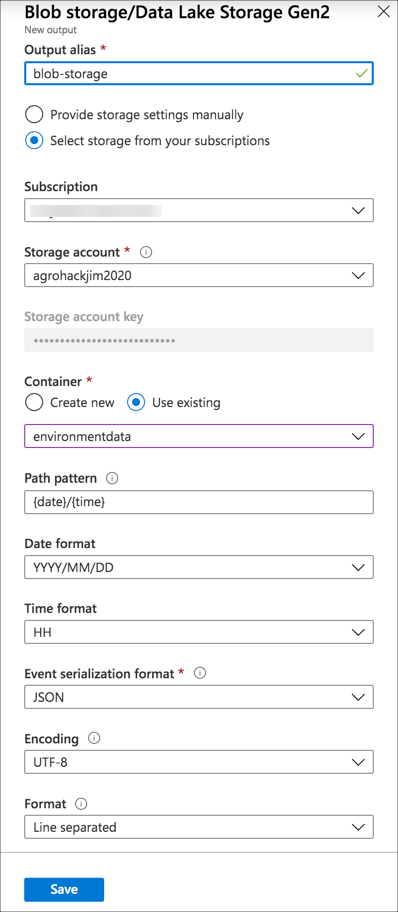

Fill in the output details

-

Set the alias to be

blob-storage -

Select Select storage from your subscriptions

-

Select your subscription and Azure Event Hubs Namespace

-

Select the storage account you created in the previous part

-

Select Use Existing for the Container

-

Select the

environmentdatacontainer -

Set the Path pattern to

{date}/{time}. JSON records are appended to a single JSON file, and setting this will cause a new file to be created each hour in a folder hierarchy based off year/month/day/hour. -

Leave the rest of the options as the defaults

-

Select Save

-

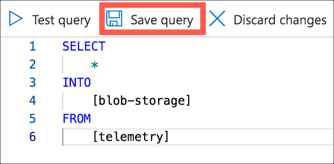
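To make the folder layout concrete, here is a small Python sketch of how a UTC timestamp could map to a blob folder under the `{date}/{time}` path pattern. It assumes the default date format (`YYYY/MM/DD`) and time format (`HH`), which matches the year/month/day/hour hierarchy described in the output settings. `folder_for` is a hypothetical helper written for illustration, not part of any Azure SDK.

```python
from datetime import datetime, timezone


def folder_for(timestamp: datetime) -> str:
    """Return the year/month/day/hour folder for a timestamp, converted to UTC."""
    return timestamp.astimezone(timezone.utc).strftime("%Y/%m/%d/%H")


# Example timestamp (assumed for illustration)
example = datetime(2020, 2, 21, 5, 12, 33, tzinfo=timezone.utc)
print(folder_for(example))  # prints 2020/02/21/05
```

All records written during the same hour land in the same folder, so a new folder (and file) appears only when the hour rolls over.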

Create the query

-

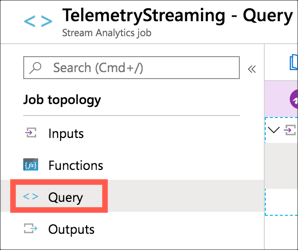

From the Stream Analytics Job, select Job topology -> Query from the left-hand menu

-

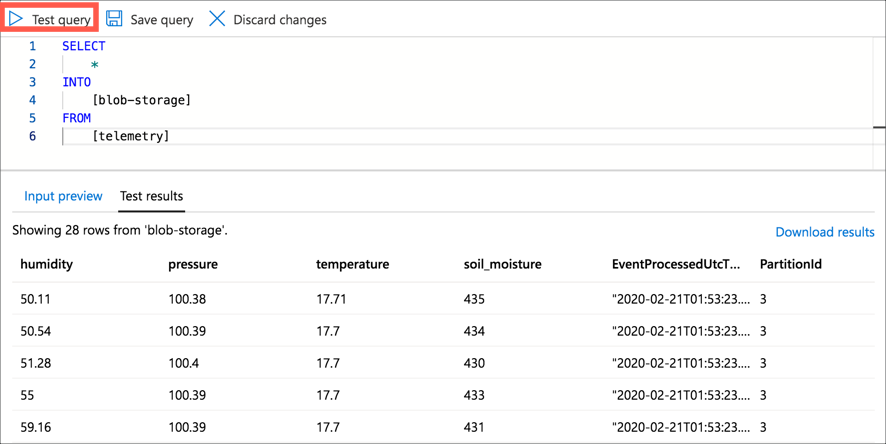

Change the query to be the following

SELECT * INTO [blob-storage] FROM [telemetry]This will select data as it comes into the

telemetryevent hub, and select it into theblob-storagestorage account. -

Select Test Query to test the query and see a sample output using real data from the event hub

-

Select Save Query

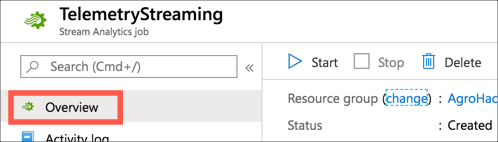

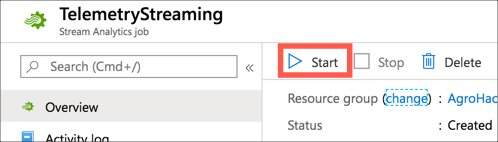

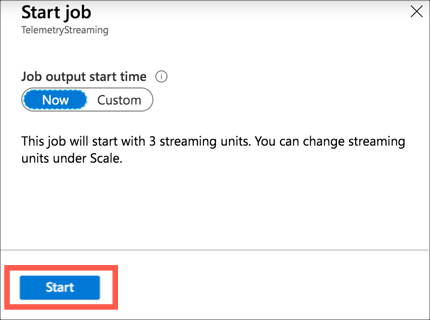
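As a rough mental model of this pass-through query, the Python sketch below copies every event from an input to an output unchanged, one JSON record per line. It only illustrates the data flow and is not how Stream Analytics is implemented; `run_passthrough` and the in-memory buffer are hypothetical stand-ins for the job and the blob output.

```python
import io
import json
from typing import Iterable, TextIO


def run_passthrough(events: Iterable[dict], sink: TextIO) -> int:
    """Write every event to the sink as one JSON line, with no filtering."""
    count = 0
    for event in events:
        sink.write(json.dumps(event) + "\n")
        count += 1
    return count


# Hypothetical telemetry events standing in for the `telemetry` input
sample_events = [
    {"humidity": 60.17, "pressure": 100.48, "temperature": 17.05, "soil_moisture": 434},
    {"humidity": 59.56, "pressure": 100.49, "temperature": 17.06, "soil_moisture": 437},
]

buffer = io.StringIO()  # stands in for the `blob-storage` output
written = run_passthrough(sample_events, buffer)
print(written)
```

A query with a filter or a projection would change what reaches the output; `SELECT *` with no `WHERE` clause forwards everything.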

Start the job

- From the Stream Analytics Job, select Overview from the left-hand menu

- Select Start

- For the Job output start time select Now

- Select Start

Validate the query

You can validate that data is being streamed to the storage account via the Azure Portal, or via the CLI.

Validate the data with the Azure Portal

-

Open the Azure Portal

-

Log in with your Microsoft account if required

-

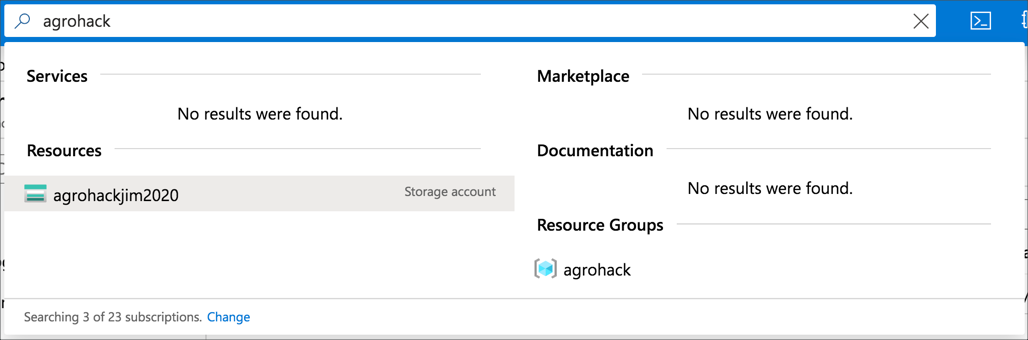

If you are not on the blade for the storage account you created, search for it by typing the name of the account into the search box at the top of the portal, and selecting the storage account under the Resources section

-

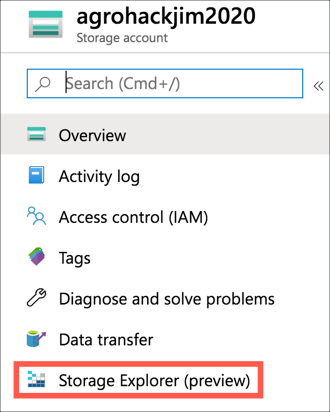

From the storage account menu, select Storage Explorer

-

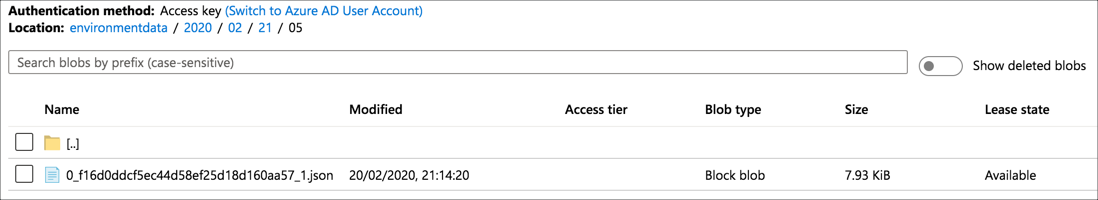

Expand the Blob Containers node, and select the environmentdata container. You will see a list of the folders, one per year that the Azure IoT Central has been collecting data. Inside each year folder is a month folder, inside that a day, inside that an hour. In the hour folder is a single JSON document that will be appended to until the hour changes, when a new document will be created in a new folder for the new hour/day/month/year.

-

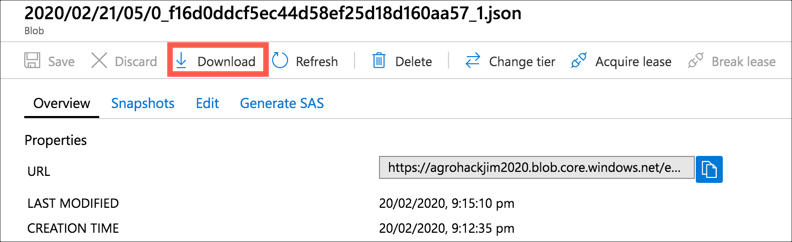

Select a JSON file and select Download to download the file

-

View the JSON file in Visual Studio Code. It will contain one line per telemetry value sent during that hour, with telemetry details:

{"humidity":60.17,"pressure":100.48,"temperature":17.05,"soil_moisture":434,"EventProcessedUtcTime":"2020-02-21T05:12:33.2547726Z","PartitionId":3,"EventEnqueuedUtcTime":"2020-02-21T05:07:40.7070000Z"} {"humidity":59.56,"pressure":100.49,"temperature":17.06,"soil_moisture":437,"EventProcessedUtcTime":"2020-02-21T05:12:33.3641037Z","PartitionId":3,"EventEnqueuedUtcTime":"2020-02-21T05:07:50.7250000Z"}
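Because each line of the file is a standalone JSON object, the file can be read line by line. The Python sketch below shows one way to do that and to average a field. `parse_json_lines` and `average` are hypothetical helper names, and the two records are the sample lines shown above.

```python
import json
from statistics import mean
from typing import Iterable, List


def parse_json_lines(lines: Iterable[str]) -> List[dict]:
    """Parse one JSON object per non-empty line."""
    return [json.loads(line) for line in lines if line.strip()]


def average(records: List[dict], field: str) -> float:
    """Average a numeric field across all records."""
    return mean(record[field] for record in records)


sample = """\
{"humidity":60.17,"pressure":100.48,"temperature":17.05,"soil_moisture":434,"EventProcessedUtcTime":"2020-02-21T05:12:33.2547726Z","PartitionId":3,"EventEnqueuedUtcTime":"2020-02-21T05:07:40.7070000Z"}
{"humidity":59.56,"pressure":100.49,"temperature":17.06,"soil_moisture":437,"EventProcessedUtcTime":"2020-02-21T05:12:33.3641037Z","PartitionId":3,"EventEnqueuedUtcTime":"2020-02-21T05:07:50.7250000Z"}
"""

records = parse_json_lines(sample.splitlines())
print(average(records, "soil_moisture"))  # prints 435.5
```

To run this against a downloaded file, pass an open file object to `parse_json_lines` in place of `sample.splitlines()`.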

Validate the data with the Azure CLI

- Run the following command to list the blobs stored in the storage account

      az storage blob list --account-name <account_name> --account-key <account_key> --container-name environmentdata --output table

  For `<account_name>` use the name you used for the storage account.

  For `<account_key>` use one of the keys used to create the collection.

- Download the blob to a file with the following command

      az storage blob download --container-name environmentdata --name <blob_name> --file data.json --account-name <account_name> --account-key <account_key>

  For `<blob_name>` use the `Name` value of one of the blobs from the list output by the previous step. You will need to use the full name including the folders, such as `2020/02/21/05/0_f16d0ddcf5ec44d58ef25d18d160aa57_1.json`.

  For `<account_name>` use the name you used for the storage account.

  For `<account_key>` use one of the keys used to create the collection.

  This will download a file into the current directory called `data.json`. View this file with Visual Studio Code. It will contain one line per telemetry value sent during that hour, with telemetry details:

      {"humidity":60.17,"pressure":100.48,"temperature":17.05,"soil_moisture":434,"EventProcessedUtcTime":"2020-02-21T05:12:33.2547726Z","PartitionId":3,"EventEnqueuedUtcTime":"2020-02-21T05:07:40.7070000Z"}
      {"humidity":59.56,"pressure":100.49,"temperature":17.06,"soil_moisture":437,"EventProcessedUtcTime":"2020-02-21T05:12:33.3641037Z","PartitionId":3,"EventEnqueuedUtcTime":"2020-02-21T05:07:50.7250000Z"}
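Each record also carries `EventEnqueuedUtcTime` and `EventProcessedUtcTime` fields, so the downloaded data can be used to check how far processing lagged behind ingestion. The sketch below is one way to compute that in Python. `parse_utc` and `processing_delay_seconds` are hypothetical helpers, and the timestamp handling assumes the format seen in the sample records (seven fractional digits and a trailing `Z`).

```python
import json
from datetime import datetime, timezone


def parse_utc(timestamp: str) -> datetime:
    """Parse a timestamp such as 2020-02-21T05:12:33.2547726Z into an aware datetime."""
    base, _, fraction = timestamp.rstrip("Z").partition(".")
    # strptime's %f accepts at most six digits, so pad or truncate the fraction
    fraction = (fraction + "000000")[:6]
    parsed = datetime.strptime(f"{base}.{fraction}", "%Y-%m-%dT%H:%M:%S.%f")
    return parsed.replace(tzinfo=timezone.utc)


def processing_delay_seconds(record: dict) -> float:
    """Seconds between the event being enqueued and being processed."""
    enqueued = parse_utc(record["EventEnqueuedUtcTime"])
    processed = parse_utc(record["EventProcessedUtcTime"])
    return (processed - enqueued).total_seconds()


# First sample record from the output shown above
line = '{"humidity":60.17,"pressure":100.48,"temperature":17.05,"soil_moisture":434,"EventProcessedUtcTime":"2020-02-21T05:12:33.2547726Z","PartitionId":3,"EventEnqueuedUtcTime":"2020-02-21T05:07:40.7070000Z"}'
delay = processing_delay_seconds(json.loads(line))
print(delay)
```

For the sample record the delay is a little under five minutes. To check a whole file, loop over the lines of `data.json` and call `processing_delay_seconds` on each parsed record.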

Use this data

Once the data is in blob storage, it can be accessed and used by multiple Azure services. This workshop won’t cover these use cases in depth as there are too many possibilities. To learn more about ways to use this data, check out the following documentation:

- Access data in Azure storage services from ML Studio

- Access cloud data in a Jupyter notebook

- Trigger an Azure Function when entries are added to blob storage

In this step, you exported IoT telemetry to Azure Blob Storage. In the next step you will create an Azure Function triggered by Azure Stream Analytics to check soil moisture.